Grimage


Overview

The Grimage cluster was originally dedicated to supporting the Grimage VR platform: driving hardware (cameras, etc.) and processing data (video captures, etc.).

More recently, 10GE Ethernet cards were added to some nodes for a new project, turning the cluster into a shared, multi-project platform. At least 4 projects currently use the cluster, which requires a resource management system and a deployment system suited to an experimental platform, as on Grid'5000.

Grimage nodes have large 4U cases so that they can host a variety of hardware.

- By design, the hardware configuration of the Grimage nodes is subject to change:

  - new generations of video (GPU) cards may be installed over time

  - 10GE network connections may change

  - ...

Updates

- 2014-05

Intel 10GE cards are removed from the nodes to be used in the ppol nodes.

- 2015-03

The Grimage machines in the F110 machine room had to be shut down to reduce noise in the adjacent room. As a result, grimage-1 to grimage-8 are no longer available.

Grimage-9 and grimage-10, which are hosted in room F212, are still available.

- 2015-08

An NVIDIA Tesla K40c GPU is installed in grimage-9. Grimage-10 now has 2 GeForce GTX 295 cards (4 GPUs in total).

Machines

| Machine | CPU | RAM | GPU | Network | Other |
|---|---|---|---|---|---|
| grimage-9.grenoble.grid5000.fr | 2x Intel Xeon E5620 (16 cores) | 24GB DDR3 | 1x Tesla K40c | IB DDR | |
| grimage-10.grenoble.grid5000.fr | 2x Intel Xeon E5620 (16 cores) | 24GB DDR3 | 2x GTX-295 (4GPU) | IB DDR | |


How to experiment

The default system of the Grimage nodes is designed to operate the Grimage VR room.

If the default system is not sufficient, use kadeploy to deploy a system adapted to your needs.

Privileged commands

Currently, the following commands can be run via sudo in exclusive jobs:

- sudo /usr/bin/whoami (provided for testing the mechanism, should return "root")

- sudo /sbin/reboot

- sudo /usr/bin/schedtool

- sudo /usr/bin/nvidia-smi
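A quick way to confirm that the sudo mechanism is active in an exclusive job is to run the test command and compare its output with "root". The sketch below does only that. The names `check_sudo` and `SUDO_CMD` are hypothetical (they are not part of the platform); `SUDO_CMD` exists only so that the check can be tried without real sudo rights. `sudo -n` makes sudo fail instead of prompting for a password.

```shell
# Hypothetical helper: succeeds only if the test command prints "root".
# SUDO_CMD is an assumed override for testing; by default "sudo -n" is used,
# which fails rather than prompting when the rule is not active.
check_sudo() {
    out=$(${SUDO_CMD:-sudo -n} /usr/bin/whoami 2>/dev/null) || return 1
    [ "$out" = "root" ]
}
```

Inside an exclusive job, `check_sudo && echo "sudo rules active"` should print the message if the mechanism works as described.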

What is x2x and how to use it

This tip is useful for people who have to work in the Grimage room, with a screen attached to a Grimage machine.

x2x lets you control the mouse pointer and keyboard input of a remote machine over the network (X11 protocol). This is very practical on Grimage nodes that have a screen attached, because it avoids using the USB mouse and keyboard, which are sometimes unreliable (because of the out-of-spec USB extension cable).

To use x2x:

- log in locally on the machine (gdm)

- run

xhost +

to allow remote X connections.

- from your workstation, run

ssh pneyron@grimage-1.grenoble.g5k -X x2x -to grimage-1:0 -west

- NB

- replace pneyron by your username

- replace the 2 occurrences of grimage-1 by the name of the Grimage node you actually use.

- make sure your ssh configuration is set up so that the *.g5k trick works (see the tip above)
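Since the username and the node name have to be substituted by hand, the command can be generated instead. This is a minimal sketch: `x2x_command` is a hypothetical helper name, and the optional third argument assumes you may want another screen edge (`-east` is a standard x2x option). It only prints the command, so it is safe to try anywhere.

```shell
# Hypothetical helper: print the x2x command line shown above.
# Usage: x2x_command USER NODE [west|east]
x2x_command() {
    # The node name appears twice: once as ssh target, once as X display.
    printf 'ssh %s@%s.grenoble.g5k -X x2x -to %s:0 -%s\n' \
        "$1" "$2" "$2" "${3:-west}"
}
```

For example, `x2x_command pneyron grimage-9` prints the command for grimage-9; paste the output into a terminal on your workstation to run it.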

10GE network setup

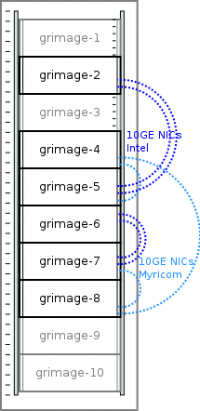

- One Myricom dual port card is installed on each of grimage-{4,5,7,8}

- One Intel dual port card is installed on each of grimage-{2,5,6,7}

Connections are point to point (NIC to NIC, no switch) as follows:

- Myricom: grimage-7 <-> grimage-8 <-> grimage-4 <-> grimage-5

- Intel: grimage-2 <=> grimage-5 and grimage-6 <=> grimage-7 (double links)
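Because there is no switch, which node can reach which over 10GE depends entirely on the cabling. The sketch below encodes the links listed above as a small table and looks up the direct peers of a node. The function names `links` and `neighbours` are hypothetical, and the table reflects only the setup described in this section (per the Updates section, the Intel cards were later removed and grimage-1 to grimage-8 were shut down).

```shell
# Point-to-point 10GE links as described above, one per line: CARD NODE_A NODE_B.
links() {
    cat <<'EOF'
myricom grimage-7 grimage-8
myricom grimage-8 grimage-4
myricom grimage-4 grimage-5
intel grimage-2 grimage-5
intel grimage-6 grimage-7
EOF
}

# Print the direct 10GE peers of a node, one "CARD PEER" pair per line.
neighbours() {
    links | awk -v n="$1" '
        $2 == n { print $1, $3 }
        $3 == n { print $1, $2 }
    '
}
```

For instance, `neighbours grimage-5` lists one Myricom peer (grimage-4) and one Intel peer (grimage-2).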

Crash course

The examples below book a single Grimage machine, grimage-10 for instance.

Normal (no deploy) jobs

- book the machine for 4 hours, to run a script

[pneyron@digitalis ~]$ oarsub -l node=1,walltime=4 -p "host like 'grimage-10.%'" /path/to/my/script

- book the machine for 4 hours, for an interactive session

[pneyron@digitalis ~]$ oarsub -l node=1,walltime=4 -p "host like 'grimage-10.%'" -I

- get an exclusive access

[pneyron@digitalis ~]$ oarsub -l node=1,walltime=4 -p "host like 'grimage-10.%'" -t exclusive /path/to/my/script
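The three commands above differ only in their last arguments, so they can be produced by one small function. This is a sketch, not a platform tool: `book_cmd` is a hypothetical name, and it uses only the oarsub options shown above. It prints the command rather than running it, so the result can be checked first.

```shell
# Hypothetical helper: print the oarsub command that books one node.
# Usage: book_cmd NODE HOURS [extra oarsub arguments...]
book_cmd() {
    node=$1
    hours=$2
    shift 2
    cmd="oarsub -l node=1,walltime=$hours -p \"host like '$node.%'\""
    # Append the remaining arguments (-I, -t exclusive, script path) if any.
    if [ $# -gt 0 ]; then
        cmd="$cmd $*"
    fi
    printf '%s\n' "$cmd"
}
```

On the frontend, `eval "$(book_cmd grimage-10 4 -I)"` would submit the interactive job, and `book_cmd grimage-10 4 -t exclusive /path/to/my/script` prints the exclusive variant.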

Deploy jobs

To change the OS (install specific software, newer versions, etc.)

- book the machine for 2 hours, for changing the OS

[pneyron@digitalis ~]$ oarsub -l node=1,walltime=2 -p "host like 'grimage-10.%'" -t redeploy "sleep 4h"

- Look at the jobs

[pneyron@digitalis ~]$ chandler

- deploy

[pneyron@digitalis ~]$ kadeploy3 -m grimage-10.grenoble.grid5000.fr -e idALL-default -u root -k

- get access to the Unix console of the machine (mostly useful for low-level debugging, e.g. seeing boot/kernel messages)

[pneyron@digitalis ~]$ kaconsole3 -m grimage-10.grenoble.grid5000.fr

- ssh to the machine as root

[pneyron@digitalis ~]$ ssh grimage-10 -l root

- modify the operating system of the machine

...

- save the modifications

root@grimage-10:~# tgz-g5k /tmp/idALL-my.tgz

- then from the frontend

[pneyron@digitalis ~]$ scp root@grimage-10:/tmp/idALL-my.tgz ~/

- create the environment file

[pneyron@digitalis ~]$ cat <<EOF > idALL-my.env
---
name: idALL-my
version: 20150925
description: my env
author: ...
visibility: shared
destructive: false
os: linux
image:
  file: $HOME/idALL-my.tgz
  kind: tar
  compression: gzip
postinstalls:
- archive: /var/lib/deploy/environments/postinstalls/idall-default-postinst.tgz-20141216-1
  compression: gzip
  script: run /rambin
boot:
  kernel: /vmlinuz
  initrd: /initrd.img
filesystem: ext4
partition_type: 131
multipart: false
EOF

- then, for subsequent deployments, instead of starting over, run

[pneyron@digitalis ~]$ kadeploy3 -m grimage-10.grenoble.grid5000.fr -a ~/idALL-my.env -k
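If you keep several saved images, the environment file from the steps above can be generated rather than edited by hand. The sketch below is a hypothetical helper (`make_env` is not a kadeploy tool). It reproduces the fields of the example environment and parameterizes only the name, version and tarball path. The YAML nesting is an assumption based on the usual kadeploy3 environment layout, and the author field is left elided as in the example.

```shell
# Hypothetical helper: write a kadeploy3 environment description to stdout.
# Usage: make_env NAME VERSION TARBALL > NAME.env
make_env() {
    cat <<EOF
---
name: $1
version: $2
description: my env
author: ...
visibility: shared
destructive: false
os: linux
image:
  file: $3
  kind: tar
  compression: gzip
postinstalls:
- archive: /var/lib/deploy/environments/postinstalls/idall-default-postinst.tgz-20141216-1
  compression: gzip
  script: run /rambin
boot:
  kernel: /vmlinuz
  initrd: /initrd.img
filesystem: ext4
partition_type: 131
multipart: false
EOF
}
```

For example, `make_env idALL-my 20150925 "$HOME/idALL-my.tgz" > ~/idALL-my.env` writes a file equivalent to the one created above, which can then be passed to kadeploy3 with `-a`.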

System changelog

- 2015-03

Grimage-1 to grimage-8 are out of service (machine room shutdown). Grimage-9 and grimage-10, which are not located in the Grimage machine room, are still operational.

- 2015-01-25

Grimage machines now use the idALL system environment (same as idgraf and idfreeze).

Acknowledgment

The grimage machines were funded by the Grimage project.